Why real expertise, not content volume, is what AI uses to decide who gets trusted and cited over time.

You have 10,000 pages of content. Perfect technical SEO. Fast load times. Clean schema. And AI never cites you. Why? Because there’s no human behind it. No real expert. No verifiable credentials. No first-hand experience. Just content for content’s sake.

Prompt engineering won’t save you if you don’t know what you’re talking about. AI amplifies expertise. It doesn’t create it. And the companies trying to scale content without actual domain knowledge are about to learn that the hard way.

AI knows you’re faking it.

The generic author bio. The AI-written content with no real insights. The manufactured credentials. The 200-word product descriptions that say nothing. The anonymous “Marketing Team” bylines. The thin content published at scale with zero expertise behind it.

AI systems are filtering out fake expertise faster than you can publish it. And the companies that built their visibility on content mills and anonymous authors are about to disappear.

Last week, a company showed me their content strategy. They were proud of it.

1,000 product pages. All AI-generated. Every description is precisely 200 words. Zero authors listed. Zero credentials displayed. Zero first-hand experience. Zero unique insights. Just templated content that could describe anything.

“We spent $40,000 on this,” they told me.

I ran a “site:domain.com” search. 1,000 pages created. 120 pages indexed. 18 pages ranking for anything.

ROI: They paid roughly $333 per indexed page and $2,222 per ranking page. And most of those won’t drive a single visitor.
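The per-page figures are easy to check. A minimal sketch that uses only the numbers from this example ($40,000 spent, 1,000 pages created, 120 indexed, 18 ranking):

```python
# Cost-per-page arithmetic for the example above.
SPEND = 40_000
FUNNEL = {"created": 1_000, "indexed": 120, "ranking": 18}

def cost_per_page(spend: float, pages: int) -> float:
    """Spend divided by the number of pages that reached a given stage."""
    if pages <= 0:
        raise ValueError("page count must be positive")
    return spend / pages

for stage, count in FUNNEL.items():
    print(f"{stage:>8}: ${cost_per_page(SPEND, count):,.2f} per page")
```

Each page cost $40 to create, but each indexed page cost about $333 and each ranking page about $2,222.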

Two months ago, I reviewed a very different site.

Fewer than 60 pages total. No AI-generated content at scale. Every article written or reviewed by a named practitioner with visible credentials and real-world experience. Case examples were specific. Opinions were stated plainly. Mistakes were acknowledged. Trade-offs were explained.

Nothing flashy. No volume play.

That site is now being cited consistently by AI Overviews and referenced by ChatGPT for multiple commercial queries. Not because it published more. Because AI could clearly see who was behind the content, what they had done, and why their perspective was worth trusting.

Same algorithms. Same tools. Completely different outcome.

This is the eighth article in the “AI Traps: Build the Base or Bust” series. We have covered technical foundations, category pages, product schema, citations, reviews, product syndication, and local SEO. All of that works. But only if there is a human behind it that AI can verify and trust.

Because the era of anonymous, thin, AI-generated content is over, and the companies still trying to scale it are about to learn what invisible actually means.

The E-E-A-T Framework: What AI Actually Looks For.

Before we dive into why your content is failing, let’s clarify what AI systems are evaluating.

E-E-A-T stands for Experience, Expertise, Authoritativeness, and Trustworthiness. It comes from Google’s Search Quality Rater Guidelines, the document Google uses to train human evaluators on what high-quality content looks like.

Here is what each component means:

- Experience: First-hand, practical experience with the topic. “I tested this product for six months” beats “This product is popular.” “In my 15 years managing enterprise infrastructure” beats “Many IT professionals believe.”

- Expertise: Deep knowledge and demonstrated skill in the subject matter. Medical advice from an MD. Legal guidance from a JD. Technical SEO strategy from someone who has actually executed it at scale.

- Authoritativeness: Recognition as a go-to source in your field. You are cited by others. You speak at industry conferences. You contribute to trade publications. Other experts reference your work.

- Trustworthiness: Reliability, accuracy, transparency, and verifiable information. Your claims can be fact-checked. Your credentials are real. Your website is secure. Your business is legitimate.

Why This Matters for AI:

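Google’s Quality Rater Guidelines train human evaluators. Their ratings do not rank pages directly, but Google uses them to evaluate and refine its automated systems. Those systems, and the AI models built on top of search, now pick up E-E-A-T signals automatically.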

When ChatGPT decides which sources to cite, when Google’s AI Overviews choose which content to summarize, and when Perplexity determines which websites to reference, they are looking for E-E-A-T signals.

Not perfectly. Not always accurately. But consistently enough that content lacking these signals gets filtered out.

And here is the uncomfortable truth: you cannot fake E-E-A-T with technical tricks.

You cannot fake it with schema markup. You cannot fake it with AI-generated content. You cannot fake it with purchased backlinks or manufactured social proof.

You actually have to be credible. You actually have to know what you are talking about. You actually have to demonstrate expertise that AI can verify across multiple sources.

Most companies are not prepared for this reality.

The Thin Content Paradox: Why Your 200-Word Pages Will Never Rank.

Here is the pattern I see everywhere:

The company publishes 500 product pages. Each page has 150 to 200 words of generic text. “Welcome to our [Product Category]. We have great [products]. Browse below to find the perfect solution for your needs.”

Zero depth. Zero insights. Zero value beyond the product grid.

Then they wonder why Google won’t index 400 of those pages.

Let me show you the math:

500 pages created. 300 pages crawled (the rest blocked or not discovered). 150 pages indexed (the rest excluded as “low quality” or “duplicate content”). 20 pages actually rank for anything.

ROI: 4% of your effort produces results.
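Breaking the same funnel down stage by stage shows where the pages are lost. A minimal sketch using only the illustrative numbers above:

```python
# Stage-by-stage drop-off for the 500-page example above.
stages = [("created", 500), ("crawled", 300), ("indexed", 150), ("ranking", 20)]

def funnel_report(stages):
    """Return (stage, count, share of previous stage, share of pages created)."""
    total = stages[0][1]
    prev = total
    report = []
    for name, count in stages:
        report.append((name, count, count / prev, count / total))
        prev = count
    return report

for name, count, step, overall in funnel_report(stages):
    print(f"{name:<8} {count:>4}  {step:6.0%} of previous  {overall:6.0%} of created")
```

The last column reproduces the 4% figure. In this example the steepest single step is from indexed to ranking, where only about 13% of indexed pages rank for anything.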

This is the thin content paradox:

- Companies want minimal effort (200 words anyone can write), maximum results (top 3 rankings), massive scale (500+ pages), and no expertise required (junior writers or AI can handle it).

- AI systems reward the opposite: substantial content (800 to 2,000 words depending on complexity), deep expertise (written by practitioners who actually know the subject), quality over quantity (10 great pages beat 100 thin ones), and demonstrable authority (real humans with real credentials).

You can’t have both.

What “Thin Content” Actually Means:

This is not just about word count, though that matters. Thin content is:

- Lack of depth. Surface-level information that anyone could write without expertise. Generic product descriptions. Obvious advice. Regurgitated common knowledge.

- No unique insights. Everything on the page could be found on 50 competitor sites. No original research. No case studies. No specific examples from experience.

- No first-hand experience. Generic descriptions with no practitioner knowledge. “This product is great for professionals” instead of “After testing this with 30 enterprise clients, we found it works best for teams under 50 people because…”

- No expertise signals. Anonymous author. No credentials displayed. No proof that the writer has any authority on the topic.

- AI-generated without editing. Obvious template content. Every page follows the same structure with different keywords plugged in. No human touch. No personality. No specific details that only an expert would know.

The AI Detection Layer:

AI does not just look at word count. It looks at information density, specificity, experience markers, unique data points, and depth of explanation.

- Information density: Are you saying something new or just filling space with obvious statements?

- Specificity: Generic terms (“many professionals,” “industry experts,” “best practices”) versus precise practitioner language (“in enterprise deployments over 10,000 users,” “when implementing OAuth 2.0 with PKCE,” “based on analysis of 500 customer support tickets”).

- Experience markers: “In my 15 years optimizing enterprise e-commerce platforms” versus “Many SEO professionals believe that optimization is important.”

- Unique data points: Original statistics, case studies with real numbers, specific examples from your work, insights you discovered through direct experience.

- Depth of explanation: Does this answer the question completely, or does it provide a surface-level overview that forces users to search elsewhere for real answers?
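Nobody outside Google, OpenAI, or Perplexity knows exactly how their systems score density or specificity. As a purely illustrative self-check (our own heuristic, not any search engine’s method), you can flag copy that leans on stock phrases and contains few concrete numbers. The phrase list below is a hypothetical starting point:

```python
import re

# Hypothetical stock phrases; extend the list for your own niche.
GENERIC_PHRASES = [
    "many professionals",
    "industry experts",
    "best practices",
    "great for professionals",
    "perfect solution",
]

def specificity_signals(text: str) -> dict:
    """Crude, illustrative counts: stock phrases vs. concrete numbers."""
    lowered = text.lower()
    generic_hits = sum(lowered.count(p) for p in GENERIC_PHRASES)
    # Tokens like "10,000", "2.0", or "15" usually indicate concrete detail.
    numbers = re.findall(r"\d[\d,.]*", text)
    words = len(text.split())
    return {
        "words": words,
        "generic_phrases": generic_hits,
        "numbers": len(numbers),
        "numbers_per_100_words": round(100 * len(numbers) / words, 1) if words else 0.0,
    }

generic = ("Browse below to find the perfect solution for your needs. "
           "Many professionals trust our best practices.")
specific = ("In enterprise deployments over 10,000 users, we saw login failures "
            "drop after switching to OAuth 2.0 with PKCE.")

print(specificity_signals(generic))
print(specificity_signals(specific))
```

A high stock-phrase count with no numbers is a prompt for an expert to rewrite the page, not a verdict on how AI will treat it.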

Why Thin Content Fails in the Age of AI:

Google’s helpful content guidance, now part of its core ranking systems, explicitly asks whether content demonstrates first-hand experience and expertise. If your content could have been written by someone with no experience in the field, it gets filtered.

When someone asks ChatGPT or Perplexity a question, these systems prioritize sources that provide comprehensive, detailed answers from credible experts. Thin content does not get cited.

When Google decides which pages to index, it evaluates whether the page adds unique value to its index. If the page is just a thin wrapper around a product grid, Google often chooses not to index it.

The Fix (That Nobody Wants to Hear):

Write less content, but make it better.

50 comprehensive pages written by real experts beat 500 thin pages written by junior writers or AI with no oversight.

Invest in practitioners who actually know the subject. Let them write from experience. Encourage specific examples, case studies, and detailed explanations.

Add depth. If you are writing about “water heater installation,” do not just say “we install water heaters professionally.” Explain the difference between tankless and traditional units, the factors that determine installation complexity, the common mistakes homeowners make when choosing a unit, and the questions to ask before hiring an installer.

Show your work. Do not just give conclusions. Explain how you arrived at them. Share data. Reference specific projects or client situations.

Or Accept Reality:

Thin content will not rank. AI will not cite it. Google will not prioritize indexing it. Your competitors with depth will dominate while you wonder why your 500-page content investment produced zero ROI.

And this ties directly to E-E-A-T. Because thin content, by definition, lacks demonstrated expertise, first-hand experience, and author authority.

Entity Building: Making Your Brand Recognizable to AI.

Now let’s talk about why AI might not recognize your company as a legitimate entity in the first place.

An entity, in AI’s understanding, is a thing that can be distinctly identified. A person. A company. A product. A concept. Not just a keyword, but a unified identity that exists across multiple sources.

How AI Builds Entity Understanding:

When someone asks ChatGPT “What is the best enterprise CRM platform?”, the AI does not just look at keywords. It looks at entities. Salesforce is an entity. HubSpot is an entity. Microsoft Dynamics is an entity.

How does AI know these are real, trustworthy entities?

Consistent mentions across authoritative sources. Wikipedia entries. News coverage in reputable publications. Analyst reports from Gartner or Forrester. LinkedIn company pages with verified information. Crunchbase profiles with funding data. Industry conference websites listing them as sponsors or speakers.

These external signals tell AI: this company is real, established, and recognized in its industry.

The Fragmentation Problem:

Most companies do not have unified entities. They have fragments.

Your website calls you “ABC Marketing Solutions.” Your LinkedIn page says “ABC Marketing Solutions, Inc.” Your Crunchbase profile says “ABC Marketing.” Your Twitter handle is @ABCMarketingSol because the full name was taken. Your press releases use “ABC Solutions.”

To a human, these obviously refer to the same company. To AI, these might be separate entities or poorly-defined fragments of an entity that it cannot confidently unify.

When AI cannot unify your entity, it cannot build trust. When it cannot build trust, it neither cites you nor recommends you.

How to Build a Unified Entity:

Step 1: Standardize Your Brand Name.

Pick one official name. Use it everywhere. Website, LinkedIn, Crunchbase, press releases, social media profiles, schema markup, author bylines, everywhere.

Not “ABC Marketing” on one platform and “ABC Marketing Solutions, Inc.” on another. One name. Consistently.
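A quick audit makes the inconsistencies visible. A minimal sketch, assuming you have collected the display name used on each platform by hand (the inventory below reuses this article’s example variants and is hypothetical):

```python
# Hypothetical profile inventory, using the name variants from the example above.
CANONICAL_NAME = "ABC Marketing Solutions"

profiles = {
    "website": "ABC Marketing Solutions",
    "linkedin": "ABC Marketing Solutions, Inc.",
    "crunchbase": "ABC Marketing",
    "press_releases": "ABC Solutions",
}

def find_name_mismatches(canonical: str, profiles: dict[str, str]) -> dict[str, str]:
    """Return every platform whose listed name differs from the canonical one.

    Only case and surrounding whitespace are ignored, so a legal suffix such
    as ", Inc." is still reported; whether to keep it is an editorial call.
    """
    target = canonical.strip().casefold()
    return {
        platform: name
        for platform, name in profiles.items()
        if name.strip().casefold() != target
    }

for platform, name in find_name_mismatches(CANONICAL_NAME, profiles).items():
    print(f"{platform}: '{name}' -> change to '{CANONICAL_NAME}'")
```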

Step 2: Implement Organization Schema.

Add Organization schema markup to your website to tell AI explicitly how your properties connect.

```json
{
  "@context": "https://schema.org",
  "@type": "Organization",
  "name": "ABC Marketing Solutions",
  "url": "https://www.abcmarketing.com",
  "logo": "https://www.abcmarketing.com/logo.png",
  "sameAs": [
    "https://www.linkedin.com/company/abc-marketing-solutions",
    "https://twitter.com/abcmarketing",
    "https://www.facebook.com/abcmarketing",
    "https://www.crunchbase.com/organization/abc-marketing-solutions"
  ]
}
```

The sameAs property tells AI: all of these profiles belong to the same entity. This is our official LinkedIn. This is our official Twitter. These are not separate companies with similar names.
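JSON-LD like the Organization example is normally embedded in the page inside a `<script type="application/ld+json">` element. A minimal Python sketch that serializes the same object into that tag; the helper name `to_jsonld_script` is our own, and the URLs are the example’s placeholders:

```python
import json

organization = {
    "@context": "https://schema.org",
    "@type": "Organization",
    "name": "ABC Marketing Solutions",
    "url": "https://www.abcmarketing.com",
    "logo": "https://www.abcmarketing.com/logo.png",
    "sameAs": [
        "https://www.linkedin.com/company/abc-marketing-solutions",
        "https://twitter.com/abcmarketing",
        "https://www.facebook.com/abcmarketing",
        "https://www.crunchbase.com/organization/abc-marketing-solutions",
    ],
}

def to_jsonld_script(data: dict) -> str:
    """Serialize structured data into a JSON-LD script tag for the page."""
    # Escape "</" so a stray "</script>" inside a value cannot close the tag early.
    payload = json.dumps(data, indent=2, ensure_ascii=False).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'

print(to_jsonld_script(organization))
```

Escaping `</` is a common defensive step when inlining JSON in HTML; the output stays valid JSON because `\/` is a legal escape for `/`.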

Step 3: Build External Validation.

Get mentioned in authoritative third-party sources. Industry publications. News coverage. Analyst reports. Conference speaker lists. Podcast guest appearances.

Each mention reinforces that you are a real, recognized entity in your field.

According to research analyzing over one million AI citations, 95% of links cited by generative AI come from non-paid sources. 82% come specifically from earned media.

When AI sees your company mentioned across multiple credible sources (not just on your own website), it builds confidence that you are a legitimate, authoritative entity.

Step 4: Claim and Optimize Your Knowledge Graph Properties.

LinkedIn company page. Crunchbase profile. Wikipedia entry (if you qualify). Industry directories. Any platform where your entity information can be verified and displayed publicly.

The more places AI can verify your existence and authority, the stronger your entity becomes.

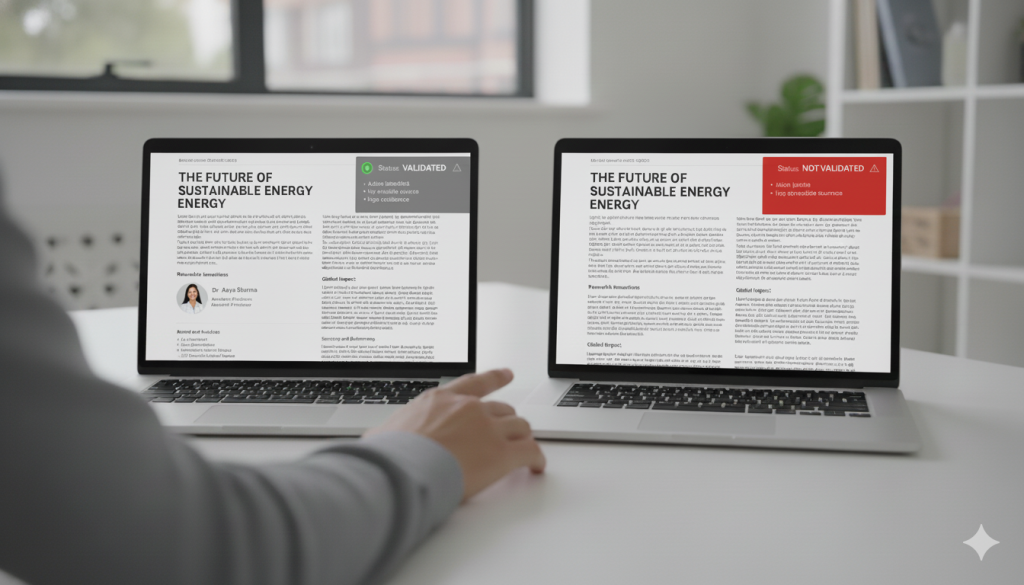

Author Authority: Real Humans vs. Anonymous Content.

Here is where most content strategies completely fall apart.

Who wrote your content?

If the answer is “Marketing Team” or “Admin” or no author listed at all, you have a credibility problem.

AI looks for real people with real credentials writing content. Not anonymous voices. Not corporate entities. Actual humans who can be verified as experts.

Why Author Attribution Matters:

When Google evaluates content quality, one signal it looks for is: who wrote this, and are they qualified to write about this topic?

A medical article written by “Dr. Sarah Chen, MD, Board Certified in Internal Medicine, 15 years of clinical experience” is trusted more than the same article written by “Health Team.”

A technical SEO guide written by “John Martinez, former Technical SEO Lead at three Fortune 500 companies, speaker at SearchLove and MozCon” is trusted more than the same guide written by “Marketing Department.”

Building Author Entities:

Just like companies need to be recognized entities, individual authors need to be recognized entities.

Step 1: Real Names, Real Photos, Real Bios.

Every piece of content needs a real author name, a real photo, and a real bio that demonstrates relevant credentials.
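If your CMS can export article metadata, the anonymous-byline problem described above is easy to audit. A minimal sketch; the field names (`author`, `bio_url`), the list of generic labels, and the sample articles are all hypothetical:

```python
# Hypothetical labels that signal an anonymous byline; adjust for your CMS.
GENERIC_BYLINES = {"marketing team", "admin", "marketing department", "health team", "staff"}

articles = [
    {"url": "/blog/api-versioning", "author": "Sarah Chen", "bio_url": "/authors/sarah-chen"},
    {"url": "/blog/crm-guide", "author": "Marketing Team", "bio_url": None},
    {"url": "/blog/seo-tips", "author": "", "bio_url": None},
    {"url": "/blog/technical-seo-audit", "author": "John Martinez", "bio_url": None},
]

def byline_issues(article: dict) -> list[str]:
    """List the byline problems described in this section for one article."""
    issues = []
    author = (article.get("author") or "").strip()
    if not author:
        issues.append("no author listed")
    elif author.casefold() in GENERIC_BYLINES:
        issues.append(f"generic byline '{author}'")
    if author and not article.get("bio_url"):
        issues.append("no author bio page")
    return issues

for article in articles:
    problems = byline_issues(article)
    if problems:
        print(article["url"], "->", "; ".join(problems))
```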

Not: “Sarah is a passionate marketer who loves helping businesses grow.”

But: “Sarah Chen has led content strategy for seven B2B SaaS companies, specializing in technical documentation for enterprise software. She has published over 200 articles on API integration, developer experience, and technical writing best practices.”

Step 2: Author Schema Markup.

Implement Person schema markup, linking each author to their credentials and external profiles.

```json
{
  "@context": "https://schema.org",
  "@type": "Person",
  "@id": "https://yoursite.com/authors/sarah-chen",
  "name": "Sarah Chen",
  "url": "https://yoursite.com/authors/sarah-chen",
  "image": "https://yoursite.com/images/sarah-chen.jpg",
  "jobTitle": "Director of Content Strategy",
  "worksFor": {
    "@type": "Organization",
    "name": "ABC Marketing Solutions"
  },
  "sameAs": [
    "https://www.linkedin.com/in/sarahchen",
    "https://twitter.com/sarahchen"
  ]
}
```

This tells AI: Sarah Chen is a real person. Here is her photo. Here is her role and employer. Here is her LinkedIn profile, where you can verify she actually works here and has the experience she claims.
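To connect an article to its author, the article’s `author` property can point at the Person node’s `@id` instead of repeating the bio. A minimal sketch that builds a JSON-LD `@graph` and checks that every author reference resolves; the article headline is a hypothetical placeholder, and `dangling_author_refs` is our own helper:

```python
import json

AUTHOR_ID = "https://yoursite.com/authors/sarah-chen"

person = {
    "@type": "Person",
    "@id": AUTHOR_ID,
    "name": "Sarah Chen",
    "url": AUTHOR_ID,
    "jobTitle": "Director of Content Strategy",
    "sameAs": ["https://www.linkedin.com/in/sarahchen"],
}

# Hypothetical article; only the author reference matters here.
article = {
    "@type": "Article",
    "headline": "Designing API Docs Developers Actually Read",
    "author": {"@id": AUTHOR_ID},  # points at the Person node above
}

graph = {"@context": "https://schema.org", "@graph": [person, article]}

def dangling_author_refs(doc: dict) -> list[str]:
    """Return author @id references with no matching node in the @graph."""
    ids = {node.get("@id") for node in doc["@graph"]}
    missing = []
    for node in doc["@graph"]:
        author = node.get("author")
        if isinstance(author, dict) and "@id" in author and author["@id"] not in ids:
            missing.append(author["@id"])
    return missing

print(json.dumps(graph, indent=2))
print("Unresolved author references:", dangling_author_refs(graph))
```

Using one stable `@id` per author lets every article on the site point at the same Person entity, which reinforces it rather than fragmenting it.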

Step 3: Consistent Authorship Across Platforms.

If Sarah writes on your company blog, she should also publish on LinkedIn under the same name. Guest post on industry sites under the same name. Speak at conferences listed under the same name. Appear on podcasts under the same name.

Every external mention reinforces Sarah’s entity as a recognized expert in her field.

Step 4: Topical Authority.

One author should not write about everything. Sarah writes about content strategy, technical documentation, and developer marketing. She does not write about accounting, legal compliance, or industrial manufacturing.

Focused expertise builds stronger entity authority than scattered generalist content.
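One rough way to see whether authors are staying within their expertise is to measure what share of each author’s articles fall inside their declared topics. A minimal sketch; the topic labels and article list are hypothetical, and the idea of tagging each article with one primary topic is our own simplifying assumption:

```python
from collections import defaultdict

# Hypothetical declared focus areas (mirrors the Sarah Chen example above).
AUTHOR_TOPICS = {
    "Sarah Chen": {"content strategy", "technical documentation", "developer marketing"},
}

# Hypothetical published articles: (author, primary topic).
published = [
    ("Sarah Chen", "technical documentation"),
    ("Sarah Chen", "developer marketing"),
    ("Sarah Chen", "content strategy"),
    ("Sarah Chen", "accounting"),
]

def topical_focus(published, author_topics):
    """Share of each author's articles that fall inside their declared topics."""
    totals, on_topic = defaultdict(int), defaultdict(int)
    for author, topic in published:
        totals[author] += 1
        if topic in author_topics.get(author, set()):
            on_topic[author] += 1
    return {author: on_topic[author] / totals[author] for author in totals}

print(topical_focus(published, AUTHOR_TOPICS))
```

Here the accounting piece pulls Sarah’s focus to 75%, which flags it for reassignment to someone with accounting credentials.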

The YMYL Factor: When Credentials Are Mandatory.

Some topics require extremely high E-E-A-T standards. Google calls these YMYL topics: Your Money or Your Life.

If your content could impact someone’s health, financial security, safety, or significant life decisions, AI holds it to a much higher standard.

YMYL Topics Include:

Medical and health information. Financial advice and planning. Legal guidance. Safety information. News and current events (especially politics, business, science).

If Your Content Is YMYL:

Author credentials are not optional. They are mandatory.

Medical content must be written or reviewed by licensed medical professionals (MD, DO, RN, etc.). Financial advice must come from certified professionals (CFP, CFA, etc.). Legal guidance must come from licensed attorneys.

You must display these credentials prominently. Not buried in a bio. Front and center.

You must cite authoritative sources. Medical journals. Legal precedents. Government data. Peer-reviewed research.

You must include clear disclaimers. “This information is for educational purposes and does not constitute medical advice. Consult your physician before making health decisions.”

If You Are Not Qualified to Write YMYL Content:

Do not write it. Or interview actual experts, give them byline credit, and have them review every word before publication.

AI is increasingly good at detecting when non-experts write about YMYL topics. And it filters that content aggressively because the stakes are high.

The Knowledge Paradox: Why AI Skills Without Domain Knowledge Are Worthless.

Let me address the most dangerous myth in content marketing right now: “AI can write our content, so we do not need subject matter experts anymore.”

No. AI amplifies what you already know. It does not replace what you do not know.

Picture two professionals using ChatGPT to solve the same problem:

- Person A is a prompt engineering expert. She knows every technique. Chain-of-thought reasoning. Few-shot learning. Role-based prompts. She can craft sophisticated prompts that extract maximum value from AI.

But she has zero domain knowledge in the subject she is writing about.

- Person B is a domain expert with 20 years of hands-on experience in the field. She knows the nuances, the edge cases, the mistakes beginners make, the strategies that actually work in practice.

But she has basic AI skills. She can write a simple prompt, but nothing sophisticated.

Who produces better content?

Person B. Every single time.

Because Person B knows which questions to ask, which details matter, which misconceptions to address, and which real-world context to include, she can spot when AI provides generic or incorrect information. She can add depth, nuance, and specific examples that only come from experience.

Person A can write beautiful prompts that extract grammatically correct, well-structured content from AI. But it will be surface-level, generic, and indistinguishable from thousands of other AI-generated articles on the same topic.

This is the knowledge paradox:

Companies think AI eliminates the need for expertise. They think prompt engineering is the new skill that matters.

But AI systems are getting better at detecting content written by people without domain knowledge. The generic phrasing. The lack of specific examples. The absence of practitioner insights. The obvious gaps where deep knowledge should be.

And they filter it out.

The Only Sustainable Strategy:

Domain experts using AI as a drafting tool, not a replacement for expertise.

Let the expert outline the content. Let AI draft sections based on that outline. Then let the expert heavily edit, add specific examples, correct inaccuracies, and inject insights that only come from experience.

The result is content that has the efficiency of AI assistance combined with the depth and credibility of real expertise.

That is what AI rewards. That is what gets cited, indexed, and ranked.

Practical E-E-A-T Implementation: What to Do This Week.

Stop theorizing. Start building.

On Your Website:

- Add real author names and photos to every article.

- Create detailed author bio pages with credentials, years of experience, and specific areas of expertise.

- Implement Person schema for every author and reference it from each article’s author property.

- Link author profiles to LinkedIn, Twitter, and industry directories.

- Display credentials prominently: certifications, education, clients served, years in the field.

Beyond Your Website:

- Get quoted in industry publications. Pitch yourself as an expert source to journalists covering your field.

- Speak at industry conferences. Even virtual events build entity recognition.

- Appear on podcasts as a guest expert.

- Contribute guest posts to authoritative sites in your industry.

- Participate in industry forums and communities using your real name and credentials.

Content Quality Over Quantity:

Audit your existing content. How much of it could only have been written by someone with real expertise? How much is generic filler anyone could write?

Delete or consolidate thin content. Seriously. Fewer high-quality pages outperform many low-quality pages.

Invest in subject matter experts who write or heavily edit your content. This is not optional anymore.

Add depth to every piece. Case studies. Specific examples. Data from your experience. Insights that only practitioners would know.
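The audit can start as a simple triage of your page inventory. A minimal sketch, assuming you can export word count, whether a named author is shown, and recent clicks per page; the 800-word floor borrows the low end of the range given earlier, while the click threshold and sample pages are hypothetical:

```python
from dataclasses import dataclass

@dataclass
class Page:
    url: str
    words: int
    named_author: bool
    clicks_90d: int  # e.g. from a Search Console export

# Hypothetical thresholds; tune them to your site and topic complexity.
MIN_WORDS = 800
MIN_CLICKS = 10

def triage(page: Page) -> str:
    """Sort a page into one of the actions suggested in this section."""
    thin = page.words < MIN_WORDS or not page.named_author
    if not thin:
        return "keep"
    if page.clicks_90d >= MIN_CLICKS:
        return "improve"  # some demand exists: add depth and a real author
    return "consolidate or delete"

pages = [
    Page("/guides/tankless-vs-tank", 1_650, True, 240),
    Page("/category/water-heaters", 180, False, 35),
    Page("/category/water-heater-parts", 150, False, 0),
]

for p in pages:
    print(f"{p.url}: {triage(p)}")
```

Word count is only a proxy here; the final call on each page should come from someone who knows the subject.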

Build External Validation:

Press releases alone do not count. Get actual news coverage in real publications.

Earn backlinks from authoritative sites by creating content worth citing.

Build relationships with industry influencers and thought leaders. Not for backlinks. For genuine collaboration and mutual citation.

Measure What Matters:

- Track branded search queries in Google Search Console. Are people searching for your company name?

- Track citations in AI-generated answers. Search for topics you cover in ChatGPT, Perplexity, and Google AI Overviews. Do they mention you?

- Track backlinks from authoritative sources. Quality over quantity.

- Track featured snippets and “People Also Ask” appearances. These signal authority.
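One way to put a number on brand demand is the share of search clicks that come from branded queries, tracked month over month. A minimal sketch that computes it from a Search Console query export; the column names (`Top queries`, `Clicks`) and the sample rows are assumptions, so check the header row of your own export:

```python
import csv
import io
import re

# Hypothetical brand terms, including a fragmented variant.
BRAND_TERMS = ["abc marketing", "abcmarketing"]

# Stand-in for a Search Console queries export (illustrative rows only).
SAMPLE_EXPORT = """Top queries,Clicks,Impressions
abc marketing reviews,120,900
best b2b content agency,40,5000
abcmarketing login,75,300
content strategy template,15,2200
"""

def branded_click_share(csv_text: str, brand_terms: list[str]) -> float:
    """Fraction of total clicks that came from queries mentioning the brand."""
    pattern = re.compile("|".join(re.escape(t) for t in brand_terms), re.IGNORECASE)
    branded = total = 0
    for row in csv.DictReader(io.StringIO(csv_text)):
        clicks = int(row["Clicks"])
        total += clicks
        if pattern.search(row["Top queries"]):
            branded += clicks
    return branded / total if total else 0.0

print(f"Branded share of clicks: {branded_click_share(SAMPLE_EXPORT, BRAND_TERMS):.1%}")
```

The trend matters more than any single value: a rising branded share suggests more people are looking for you by name.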

The AI Content Dilemma: Can You Use AI at All?

Let me be clear: Yes, you can use AI to help create content. But only if you do it right.

What Works:

AI as a drafting tool for expert writers. The expert outlines the piece. AI drafts sections. The expert heavily edits, adds depth, and corrects errors.

AI for research assistance. Gathering background information, summarizing studies, and identifying gaps in coverage.

AI for ideation. Brainstorming angles, questions to address, and structures to try.

What Does Not Work:

Publishing unedited AI content. It is often easy to spot, and it gets filtered.

Using AI to write about topics you have no expertise in. Garbage input equals garbage output.

Scaling content production without quality control. More content does not equal better results if the content lacks expertise.

Using fake author names or listing “AI-generated by [Tool].” This destroys credibility.

The Detection Arms Race:

AI detection tools are improving rapidly. More importantly, AI systems are getting better at detecting content that lacks real expertise.

Google does not penalize AI content per se. Google penalizes unhelpful, low-quality content, which AI often produces when used without expert oversight.

If you cannot add genuine expertise to AI-generated content, do not publish it.

The Human Layer AI Cannot Replace:

Here is the uncomfortable truth that most companies do not want to face:

The era of cheap, scaled, anonymous content is over.

You cannot game E-E-A-T with technical tricks. You cannot fake expertise with schema markup. You cannot manufacture authority with purchased backlinks.

You actually have to be credible. You actually have to employ real experts. You actually have to demonstrate first-hand experience that AI can verify across multiple sources.

This is harder. This is more expensive. This requires real investment in people who know what they are talking about.

But it is also the only sustainable strategy in the age of AI-powered search.

Because AI is getting better at detecting authentic expertise, the content mills are being filtered out. The anonymous authors are being deprioritized. The thin, generic, AI-generated-at-scale content is disappearing from results.

What remains are real humans with real credentials writing real insights from real experience.

That is what AI cites. That is what Google indexes. That is what users trust.

Build the human layer. Let AI amplify what you already know.

If you are wondering where your E-E-A-T signals stand or would like a second opinion on your content strategy, author credibility, or entity building, consider consulting your trusted content strategist or SEO expert for a review. If you do not know anyone, feel free to reach out. I am happy to take a look. Sometimes the best insights come from a conversation, not a blog post.

What This Means: A Quick Guide.

- E-E-A-T: Experience, Expertise, Authoritativeness, and Trustworthiness. Google’s framework for evaluating content quality. Why it matters: AI systems shaped by these guidelines now pick up E-E-A-T signals automatically when deciding what to cite and recommend.

- Entity: A thing AI recognizes as distinct and real (person, company, product, concept). Not just a keyword, but a unified identity across the web. Why it matters: If AI cannot recognize you as a legitimate entity, it cannot trust or recommend you.

- Thin Content: Content with insufficient depth, no unique insights, no first-hand experience, or no demonstrated expertise. Why it matters: Google often excludes thin content from indexing entirely, and AI rarely cites it.

- Person Schema (author markup): Structured data markup that tells AI who wrote the content and links to their credentials and external profiles. Why it matters: Helps AI verify that real, qualified experts wrote your content.

- Organization Schema: Structured data that defines your company as an entity and connects all your official properties (website, LinkedIn, social profiles). Why it matters: Unifies fragmented mentions of your brand so AI recognizes them as the same entity.

- YMYL (Your Money or Your Life): Content topics that could impact health, financial security, safety, or major life decisions. Why it matters: AI holds YMYL content to much higher E-E-A-T standards and requires verified expert credentials.

- Domain Expertise: Deep, practical knowledge in a specific field gained through hands-on experience. Why it matters: AI amplifies expertise but cannot create it; content without domain knowledge gets filtered out.

- Earned Media: Coverage, mentions, or citations in third-party publications not paid for or controlled by you. Why it matters: In research analyzing over one million AI citations, 95% of links cited by generative AI came from non-paid sources and 82% from earned media; AI trusts external validation over owned content.

- Entity Fragmentation: When AI sees your company as multiple separate entities due to inconsistent branding across platforms. Why it matters: Fragments your authority signals and reduces AI’s confidence in recommending you.

- Knowledge Graph: Google’s database of entities and their relationships used to understand search queries and generate results. Why it matters: Being recognized in the Knowledge Graph signals strong entity authority to AI systems.

- First-Hand Experience: Content based on direct, personal involvement with the topic rather than research or second-hand information. Why it matters: AI detects and rewards experience markers like “I tested,” “In my 15 years,” and specific practitioner insights.

- Information Density: The amount of unique, valuable information per word in your content. Why it matters: AI evaluates whether content provides substantive value or just fills space with generic statements.

Now It’s Your Turn:

The systems are already deciding. Every piece of content you publish is being evaluated not just for keywords or backlinks, but for signals of genuine expertise that AI can verify across multiple independent sources.

Your anonymous bylines, your thin 200-word pages, your AI-generated content with no human editing: AI sees all of it. And it is filtering you out while you wonder why your rankings collapsed.

As you think about your own E-E-A-T signals and content strategy, consider:

- If every author byline on your site said “AI Assistant” instead of a human name, would your content be treated any differently by AI than it is right now?

- What percentage of your content actually requires domain expertise to write? Be honest. How much could a college intern with ChatGPT replicate in 30 minutes?

- When was the last time you published something that could ONLY have been written by someone who actually did the work, not just researched it?

- If your competitors hired your entire content team tomorrow, could AI tell the difference between your content and theirs?

These are not rhetorical questions. They are diagnostic ones.

And if the answers make you uncomfortable, that is not a bad thing. Discomfort is the first step toward building what actually works.

I would love to hear your thoughts.

Next Week: The Human Layer on AI Copy, When to Edit, When to Delete, When to Start Over.

Your E-E-A-T signals can be strong. Your authors can have real credentials. Your entity can be well-established.

But if your content sounds like a robot wrote it, lacks personality and voice, and reads like every other AI-generated article on the same topic, users will bounce, and AI will deprioritize it.

Next week, we tackle the final piece: adding the human layer to AI-generated content. How to edit AI drafts so they sound human. When to delete sections that add no value. When the AI output is so generic that starting over from scratch is faster than editing.

Because AI can draft, but only humans can make content worth reading.

What percentage of your “AI-assisted” content is actually just lightly edited AI output with no real human insight added?

Until then, add real author names to your content. Build your author bio pages with credentials. Start contributing to external publications to build entity recognition.

Build the base. Let AI amplify what works.