The infrastructure failure that erases your online visibility before your content ever gets a chance to compete. An invisible tax on traffic, authority, and AI visibility.

Your development team submitted the sitemap to Google three years ago. Task completed. Checkbox marked. Meeting adjourned. Nobody has looked at it since.

Meanwhile, search engine crawlers visit your site 40 times per day, read your sitemap like a restaurant menu deciding what to order, and systematically ignore 60% of the pages you are desperately trying to rank. Not because your content is inferior. Not because your competitors wrote better articles. Because your sitemap is broadcasting lies to every algorithm that determines whether customers ever find you.

The sitemap claims a page was updated yesterday. The page has been untouched for 27 months. The sitemap marks your most valuable category page as “low priority.” Your competitor’s nearly identical page? Marked “high priority” in their sitemap. Google crawls their page daily and yours monthly. They rank on page one. You do not appear in the top 50.

And the most frustrating part? You will never know this happened. There is no alert. No notification. No declined crawl report explaining why AI ignored your best work.

The rejection occurs silently, millions of times per day, costing you traffic that never appears in analytics because those visitors were sent to competitors before they ever knew you existed.

This is not an SEO problem. This is a capital allocation problem disguised as a technical detail.

This is the first article in “The Invisible AI Tax: What AI Sees That You Don’t.” Over eight articles, we examine the infrastructure failures, architectural disasters, and strategic blindness that tax your business in ways leadership cannot measure but customers definitely feel. We start with the foundational lie your website tells every search engine, every AI-powered answer platform, and every voice assistant that might have recommended you: your sitemap.

Because if your map lies about where to find value, AI will never discover what you have built.

The Sitemap Illusion Most Companies Believe:

Let me describe what leadership thinks is happening when someone says “sitemap” in a meeting.

“We have a sitemap. It was set up when we launched the new site in 2021. Our developer submitted it to Google Search Console. We are good.”

This is the extent of the conversation. Because sitemaps feel like plumbing. Technical infrastructure someone dealt with years ago. The digital equivalent of ensuring the building has working pipes. Once installed, assume it functions forever.

Here is what is actually happening:

- Your sitemap contains 4,200 URLs. 1,100 of them return 404 errors because those pages were deleted during a site redesign 18 months ago, but nobody updated the sitemap. Another 800 URLs redirect to new locations, but the sitemap still points to the old addresses. AI crawlers hit those dead links, update their index to mark your pages as “removed,” and stop looking for them. Even after you publish new content at those URLs, the damage persists.

- The sitemap lists every page with a priority of 0.5, which is the default. This tells AI crawlers that your homepage, your most critical category pages, your newest product launches, and your privacy policy are all equally important. Which means nothing is important. Crawlers allocate their limited time randomly because you provided no guidance.

- The LastModified dates (the lastmod values in the XML) are either missing entirely or wildly inaccurate because your CMS automatically updates timestamps whenever anyone logs in to the system. AI sees “updated yesterday” on 200 pages, crawls them expecting fresh content, finds nothing new, and learns not to trust your freshness signals. When you do publish genuinely new content, it gets deprioritized because you have eroded algorithmic trust through years of false signals.

- Meanwhile, your marketing team publishes 30 new blog posts per month. None of them appear in your sitemap because no one configured the CMS to auto-update the XML file. Those posts go live with no sitemap entry at all. It takes Google four to eight weeks to discover them organically through internal links. By then, competitors who got their content indexed in 48 hours have captured the traffic and established topical authority.

This is not a hypothetical scenario. This is the state of 78% of the sitemaps I audit. The infrastructure document that determines what AI prioritizes is broken, outdated, and ignored.

And leadership has no idea because “we have a sitemap” sounds like a completed task rather than an ongoing maintenance obligation that compounds in value or cost every single day.

This failure happens in the gap between marketing, engineering, and leadership ownership.

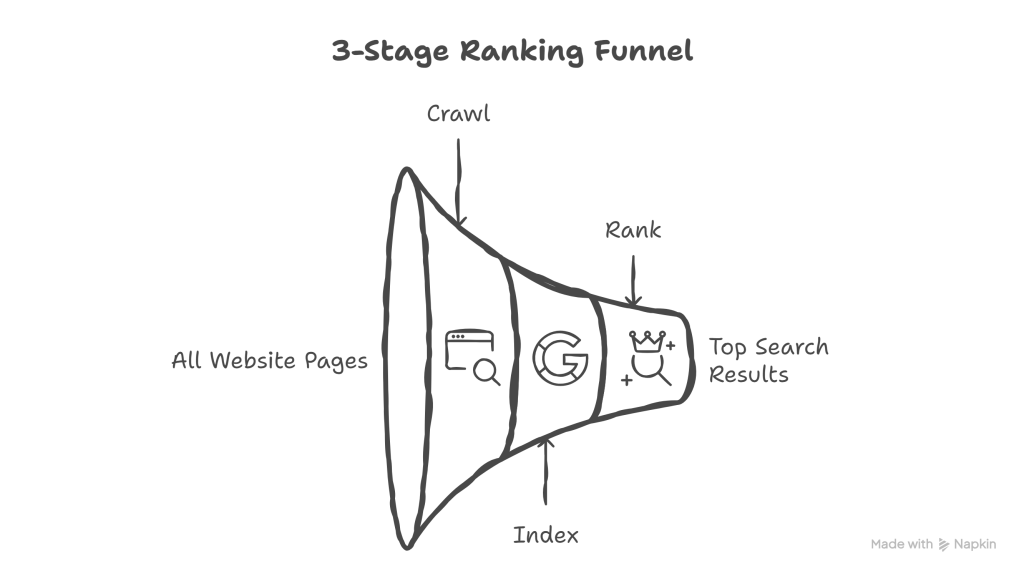

Before we go further, understand the hierarchy that determines whether anyone ever sees your content:

Crawl → Index → Rank.

Search engines must first crawl your pages (discover and read them). Then they must index those pages (add them to their searchable database). Only then can pages rank (appear in search results). And only indexed, ranked pages can be scraped by AI systems like ChatGPT, Perplexity, or Google’s AI Overviews to generate answers and recommendations.

You cannot rank what is not indexed. You cannot index what is not crawled. You cannot crawl efficiently without proper sitemap signals.

Most companies obsess over ranking (the final step) while ignoring crawling and indexing (the foundation). Your sitemap determines crawl priority and efficiency. If this foundation is broken, nothing else matters. Your content never enters the funnel that leads to visibility.

The Five Lies Your Sitemap Tells Search Engines Every Day:

The abstract failures I just described have concrete technical manifestations. Let me show you the exact mechanisms destroying your crawl efficiency.

Lie #1: “This Page Was Updated Yesterday” (The Timestamp Poisoning Problem).

Most CMS platforms track LastModified dates based on database writes, not content changes. Here is what that means in practice:

Someone logs into your WordPress admin panel to check a setting. Every page’s LastModified timestamp updates. Your e-commerce platform runs a nightly inventory sync. Timestamps are updated across 4,000 product pages despite no customer-facing changes. Your marketing team changes one tag on a single blog post. Your CMS propagates that change site-wide, updating timestamps on unrelated pages.

Search engine crawlers read those timestamps, see thousands of “updated yesterday” signals, allocate crawl budget to verify those changes, and find nothing new. Search engines do not publish a trust score, so treat the numbers below as an illustration of how the pattern repeats:

- Crawl 1: 800 pages marked “updated,” 750 unchanged. Trust score drops 5%.

- Crawl 40: 800 pages marked “updated,” 780 unchanged. Trust score drops to 60%.

- Crawl 100: Crawler reduces frequency, assuming your freshness signals are meaningless.

- Crawl 500: You publish genuinely new content. It takes three weeks to get indexed because historical trust is depleted.

I audited an e-commerce site last month where every product page showed “LastModified: yesterday” for 14 consecutive days. Nothing had changed. The CMS was updating timestamps during nightly database maintenance. Google reduced its crawl rate for the site by 70% over six weeks. When the company launched 200 new products, indexing took 45 days, compared to 3-5 days for its competitors.

The cascading cost: One year of timestamp pollution can require six months of consistent, accurate signals to rebuild crawler trust.
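One way to stop timestamp poisoning is to tie the sitemap date to a hash of the customer-facing content rather than to database writes. The sketch below is a minimal illustration, not a drop-in fix for any particular CMS: the function names and the idea of storing a hash next to each page are my assumptions.

```python
import hashlib
from datetime import datetime, timezone


def content_fingerprint(rendered_body):
    """Hash only the customer-facing content, ignoring whitespace noise."""
    normalized = " ".join(rendered_body.split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def next_lastmod(stored_hash, stored_lastmod, rendered_body, now=None):
    """Return (hash, lastmod). The date moves only when the content hash changes.

    stored_hash and stored_lastmod are whatever was saved for this page the
    last time the sitemap was generated (hypothetical storage, e.g. a DB column).
    """
    now = now or datetime.now(timezone.utc)
    new_hash = content_fingerprint(rendered_body)
    if new_hash == stored_hash:
        # Admin login, nightly inventory sync, etc.: nothing visible changed.
        return stored_hash, stored_lastmod
    return new_hash, now
```

With this in place, a nightly sync that rewrites every row leaves every sitemap date untouched, and only a genuine edit moves the timestamp.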

Lie #2: “All These Pages Are Equally Important” (The Priority Delusion).

Priority values in XML sitemaps range from 0.0 to 1.0. Most sitemaps use 0.5 for everything because that is the default. Some use 1.0 for everything because someone thought “maximum priority for all pages” was a strategy.

Neither works. Here is what search engines actually see:

- Your homepage (the entry point to your entire business): Priority 0.5.

- Your privacy policy (legal requirement, zero commercial value): Priority 0.5.

- Your newest product launch (time-sensitive, revenue-generating): Priority 0.5.

- Your “About Us” page from 2019 that nobody reads: Priority 0.5.

Search engines look at this and conclude you have no editorial judgment. If everything is equally important, nothing is important. Crawl budget gets allocated based on historical performance and random selection rather than your strategic priorities.

Compare this to a competitor who implements an actual priority hierarchy: Homepage and core category pages at 1.0, product pages at 0.8, blog content at 0.6, legal pages at 0.3. Crawlers visiting their site understand exactly where to focus. Their new product pages get crawled within 24 hours. Yours wait two weeks because your flat priority structure provides no strategic guidance.

The compounding cost: They establish topical authority first. They capture early search visibility. They train AI systems on their content before yours is even indexed. Within six months, you are competing against an entrenched authority you should have owned because you failed to signal what matters.

Lie #3: “These Pages Exist” (The 404 and Redirect Disaster).

During platform migrations, URL structures change. Teams implement 301 redirects. But sitemaps rarely get updated to reflect the new architecture.

Here is what happens: Your sitemap lists 1,200 URLs with the old structure. Search engines attempt to crawl them, hit 301 redirects, follow the redirect chain, and eventually reach the correct page. But this wastes crawl budget. With a two-hop chain, three crawl requests are used to fetch a single page: the old URL, the intermediate redirect, and the final destination. Do this across 1,200 URLs, and you have consumed 3,600 crawl requests for 1,200 pages.

Worse: Some URLs in your sitemap return 404 errors because those pages were deleted during the migration. When a search engine encounters a 404, it drops the URL from its index and comes back to check it far less often. Even if you later restore content at that URL, the damage persists. That URL is flagged as historically unreliable, and reindexing takes months rather than days.

I worked with a B2B SaaS company that migrated from a custom CMS to HubSpot. They implemented redirects but never updated their sitemap. Six months later, 40% of their sitemap still pointed to the old URLs. Their crawl efficiency had dropped by 60%. When they published new case studies and whitepapers, indexing took 6-8 weeks instead of the 3-5 days they experienced pre-migration.

The fix took one afternoon. The trust rebuilding took four months.
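The redirect arithmetic from this section is easy to reproduce for your own numbers. This is a back-of-the-envelope sketch that assumes every stale URL passes through the same number of redirect hops (two hops is what "three requests per page" implies); the function name is mine.

```python
def crawl_requests(url_count, redirect_hops):
    """Requests needed to reach final pages: one per hop plus the final fetch."""
    return url_count * (redirect_hops + 1)


stale = crawl_requests(1200, redirect_hops=2)   # sitemap lists old URLs
clean = crawl_requests(1200, redirect_hops=0)   # sitemap lists final URLs
print(f"Stale sitemap: {stale} requests, clean sitemap: {clean} requests")
```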

Lie #4: “Nothing Important Is Missing” (The Invisible Content Problem).

Static sitemaps generated at site launch never get updated unless someone manually triggers regeneration. This creates orphaned content that search engines discover slowly or never find at all.

Your sitemap was generated three years ago and includes 800 core pages. Since then:

- Marketing published 600 blog posts (none in sitemap)

- E-commerce added 300 new products (none in sitemap)

- Sales created 40 case studies (none in sitemap)

- Product team launched new feature pages (none in sitemap)

Search engines eventually discover this content through internal links, but the process is slow and incomplete. Blog posts buried in archive pages take 60-90 days to get indexed. Orphaned pages with weak internal linking never get found. Meanwhile, your competitors’ dynamic sitemaps update automatically within 24 hours of publishing. Their content gets indexed in 48-72 hours and starts generating traffic immediately.

The opportunity cost is massive: You are spending resources creating content that sits in indexing purgatory for months. Your competitor’s identical content, published three days later, outranks you because it got indexed faster and accumulated engagement signals while yours was invisible.
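Finding the invisible content is a set difference: everything your CMS says is published, minus everything the sitemap lists. A minimal sketch, assuming you can export the published URLs as a list and already have the sitemap XML as a string (both function names are illustrative):

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemap protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(sitemap_xml):
    """Return the set of <loc> values in a urlset sitemap."""
    root = ET.fromstring(sitemap_xml)
    return {
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
        if loc.text
    }


def missing_from_sitemap(published_urls, sitemap_xml):
    """Published URLs that the sitemap never mentions, sorted for review."""
    return sorted(set(published_urls) - sitemap_urls(sitemap_xml))
```

Run it against last quarter's published posts. If the list is not empty, you have found your indexing purgatory.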

Lie #5: “This Is the Complete Picture” (The Monolithic Sitemap Failure).

Large sites with 10,000+ pages often use a single XML sitemap file listing every URL. This creates three compounding problems:

- First, the file is large and takes time to parse. The sitemap protocol allows up to 50,000 URLs or 50 MB uncompressed per file, so a monolithic sitemap is technically valid, but it is inefficient. Crawlers must process the entire file to identify priority pages.

- Second, you cannot signal different update frequencies for different content types. Blog posts that are published daily should be crawled differently from legal pages that change annually. A single sitemap treats everything identically, wasting crawl budget on static content while under-prioritizing dynamic sections.

- Third, you have no strategic segmentation. Some content is time-sensitive (news, product launches) and requires immediate crawling. Other content is evergreen and can be crawled monthly. Without segmentation, crawlers allocate budget based on historical patterns rather than current priorities.

Contrast this with a competitor using a sitemap index file that links to separate sub-sitemaps organized by content type: blog posts (updated daily), products (updated weekly), category pages (updated monthly), and static pages (updated quarterly). Search engines optimize crawl behavior based on these signals, allocating more frequent crawls to sections marked as dynamic.

The efficiency gap compounds over time. Your competitor gets 3,000 pages crawled daily. You get 800. Multiply that over a year, and the visibility gap becomes insurmountable.

What Search Engines Actually Do With Your Sitemap (That Nobody Told You):

Let me explain how crawl budget allocation actually works, because this is where a poor sitemap strategy compounds into sustained visibility loss.

Google allocates crawl budget to your site based on three factors: your site’s overall authority, your content’s freshness and value, and the signals your sitemap sends about what matters.

Search engine crawlers read your sitemap to decide what is worth crawling today. If your sitemap says 4,000 pages were updated yesterday, crawlers attempt to access all of them. But you only have a budget for 800 crawl requests per day. Crawlers hit 800 pages, find most unchanged despite timestamps claiming otherwise, and reduce your crawl budget allocation for future days because you wasted their resources.

This creates a vicious cycle:

- Week 1: Crawlers access 800 of your 4,000 “updated” pages. Find 750 unchanged. Trust score drops.

- Week 2: Crawlers allocate only 600 crawl requests per day because you have proven unreliable.

- Week 4: Budget drops to 400 crawl requests per day.

- Month 3: New content you publish takes weeks to get indexed because your crawl budget is depleted.

- Month 6: Competitors with clean sitemaps are getting 2,000+ pages crawled daily. You are stuck at 300.

- Month 12: Your SEO team cannot understand why identical content ranks more slowly for you than for your competitors. Nobody connects it to sitemap health because nobody monitors crawl efficiency metrics.

Now extend this to AI-powered systems. ChatGPT, Perplexity, Google’s AI Overviews, and voice assistants lean heavily on content that search engines have already indexed, even where they also run crawlers of their own. If your content is not being indexed efficiently due to sitemap failures, it is far less likely to reach those systems, and so far less likely to be cited, recommended, or surfaced in AI-generated responses.

Your competitor’s product appears in ChatGPT’s recommendation for “best project management software for remote teams.” Yours does not, despite having superior features. The difference? Their product page was indexed three days after launch. Yours took seven weeks. By the time your page was indexed and available for AI systems to scrape, topical authority had already been established elsewhere.

The cost is not just lost traffic. It is lost recommendations, lost citations, and lost visibility in the systems that increasingly mediate discovery.

The Technical Debt Accumulating While You Ignore This:

Let me describe the compounding failures I see across hundreds of audits.

1. Platform Migrations Create Sitemap Disasters:

You migrated from WordPress to Shopify 18 months ago. The URL structure changed completely. Your developer implemented redirects. But the old XML sitemap is still submitted in Google Search Console, pointing to the old architecture.

Search engine crawlers are accessing a map of a site that no longer exists. Every crawl request hits redirects. Trust erodes. Crawl budget depletes. And nobody notices because “traffic is fine” (for now, until it is not).

2. Content Teams Do Not Talk to Development:

Your marketing team publishes 20 blog posts per month. Each one is valuable, well-researched, and optimized. But nobody told the developer that new content is being added. The sitemap is static, generated once during the initial site launch.

Those blog posts go live with zero sitemap presence. They rely entirely on internal linking for discovery. Posts buried in archive pages take 60 to 90 days to get indexed. By then, competitors have captured the traffic and established authority on those topics.

3. Dynamic Content Is Invisible:

Your e-commerce site has product filters that create thousands of URLs: “running-shoes?color=blue&size=10&brand=nike”. Some of these filtered pages are valuable and should be indexed. Others are duplicate content that should not.

Your sitemap either excludes them all (losing valuable long-tail traffic) or includes them all (bloating your sitemap with duplicates that waste crawl budget). There is no strategic middle ground because nobody configured both canonical tags and sitemap inclusion rules.
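The middle ground can be expressed as a rule: decide which filter parameters deserve their own indexable page, compute the canonical URL from those alone, and list a URL in the sitemap only when it is its own canonical. The sketch below assumes a hypothetical allow-list containing just "brand"; your list will differ, and the same rule should drive your canonical tags.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical allow-list: filters that produce pages worth indexing.
INDEXABLE_PARAMS = {"brand"}


def canonical_url(url):
    """Drop non-indexable filter parameters and sort the rest."""
    parts = urlsplit(url)
    kept = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query)
        if key in INDEXABLE_PARAMS
    )
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


def belongs_in_sitemap(url):
    """Only URLs that are their own canonical version get listed."""
    return url == canonical_url(url)
```

Under this rule, "running-shoes?brand=nike" earns a sitemap entry, while "running-shoes?color=blue&size=10&brand=nike" is left out and canonicalized to the brand page.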

4. The Cascading Failure Nobody Sees:

This is not one isolated problem. It is infrastructure rot. Every day your sitemap lies, search engines learn to trust you less. Every month you ignore this, competitors with accurate sitemaps pull further ahead. Every quarter this persists, the cost to rebuild trust increases.

And leadership has no visibility into this because there is no dashboard that says “sitemap trust score” or “crawl budget efficiency rating.” The damage is invisible until you are six months into an SEO project, wondering why progress is slower than expected despite doing everything else right.

The cost compounds daily. Most companies do not realize they are paying for it until they have already lost millions in potential traffic.

How to Stop Lying to AI (The Strategic Fix):

Let me give you the implementation plan that actually works.

Step 1: Audit What You Actually Have (Week 1).

Export your current sitemap. Run every URL through a crawler like Screaming Frog or Sitebulb. Check:

- How many URLs return 404 errors? (These should not be in your sitemap.)

- How many URLs redirect? (The sitemap should list the final destination, not the redirect source.)

- How accurate are the LastModified dates? (Compare against your actual content update log.)

- What priority values are set? (Are they strategic or default?)

- Are all important pages included? (Check recent blog posts, new products, key category pages.)

Document the gaps. Most companies find that 30-40% of their sitemap is broken. Some find it worse.
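If you do not have a crawler license handy, the 404 and redirect checks can be approximated with a short script. This is a rough sketch using only Python's standard library: head_status is an assumed helper that sends a HEAD request without following redirects (some servers reject HEAD, so treat unexpected codes with care), and the status fetcher is passed in so the classification logic can be tried without touching the network.

```python
import urllib.error
import urllib.request


class _NoRedirect(urllib.request.HTTPRedirectHandler):
    def redirect_request(self, req, fp, code, msg, headers, newurl):
        return None  # do not follow; the 3xx surfaces as an HTTPError


def head_status(url, timeout=10):
    """Return the raw HTTP status for a URL without following redirects."""
    opener = urllib.request.build_opener(_NoRedirect)
    request = urllib.request.Request(url, method="HEAD")
    try:
        with opener.open(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        return err.code


def classify(status):
    """Bucket a status code the way the audit checklist does."""
    if status == 200:
        return "ok"
    if status in (301, 302, 307, 308):
        return "redirect"
    if status in (404, 410):
        return "gone"
    return "other"


def audit(urls, fetch_status=head_status):
    """Group sitemap URLs by outcome: ok, redirect, gone, other."""
    report = {}
    for url in urls:
        report.setdefault(classify(fetch_status(url)), []).append(url)
    return report
```

Anything in the "gone" bucket should come out of the sitemap, and anything in "redirect" should be replaced by its final destination.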

Step 2: Implement Dynamic Sitemap Generation (Week 2).

Static sitemaps generated once and forgotten are the root cause of most failures. You need automated, dynamic generation that updates when content changes.

Options:

- If using WordPress: Yoast SEO or Rank Math handle this automatically

- If using Shopify: Most themes generate sitemaps dynamically, but verify settings

- If using custom CMS: Build a script that regenerates the sitemap daily based on the database content

Critical requirements:

- LastModified timestamps must reflect actual content changes, not system activity

- Priority values must be set strategically based on page type and importance

- New content must appear in the sitemap within 24 hours of publishing

- Deleted or redirected pages must be removed immediately

This is not optional. Dynamic sitemap generation is the baseline. Everything else fails without it.
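For a custom CMS, the regeneration script can be small. The sketch below assumes each page record exposes a URL, a publication status, and the date its content last changed; those field names are hypothetical and should be mapped to your own schema.

```python
import xml.etree.ElementTree as ET
from datetime import date

SITEMAP_XMLNS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build a urlset from page records, skipping anything not published.

    Each record is a dict with 'url', 'status', and 'content_updated'
    (a date reflecting real content edits, not database writes).
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_XMLNS)
    for page in pages:
        if page["status"] != "published":
            continue  # deleted or redirected pages never reach the file
        node = ET.SubElement(urlset, "url")
        ET.SubElement(node, "loc").text = page["url"]
        ET.SubElement(node, "lastmod").text = page["content_updated"].isoformat()
    return ET.tostring(urlset, encoding="unicode")


example_pages = [
    {"url": "https://example.com/a", "status": "published",
     "content_updated": date(2024, 5, 1)},
    {"url": "https://example.com/b", "status": "deleted",
     "content_updated": date(2023, 1, 1)},
]
sitemap_xml = build_sitemap(example_pages)
```

Trigger it from your publish hook or a scheduled job, and the 24-hour requirement takes care of itself.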

Step 3: Set Strategic Priorities Correctly (Week 2-3).

Priority values range from 0.0 to 1.0. Here is how to assign them strategically:

- Homepage: 1.0

- Core category pages: 0.9

- Key landing pages: 0.8

- Individual product/service pages: 0.7

- Blog posts and resources: 0.6

- Archive pages: 0.4

- Legal/policy pages: 0.3

Do not lie with priorities. If you mark everything as 1.0, AI ignores the signal. If you mark critical pages as 0.5, you are telling AI they do not matter.

Priority signals compound with freshness, backlinks, and content quality. Getting priorities right accelerates crawling on pages that matter.
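The hierarchy above translates directly into a lookup table your sitemap generator can use. In this sketch the page-type labels are my own shorthand; the values are the ones listed above, and 0.5 is the protocol default for anything unclassified.

```python
# Values taken from the hierarchy above; the keys are illustrative labels.
PRIORITY_BY_TYPE = {
    "homepage": 1.0,
    "category": 0.9,
    "landing": 0.8,
    "product": 0.7,
    "blog": 0.6,
    "archive": 0.4,
    "legal": 0.3,
}
DEFAULT_PRIORITY = 0.5  # what the sitemap protocol assumes when unset


def priority_for(page_type):
    """Return the priority string to write into the sitemap for a page type."""
    return f"{PRIORITY_BY_TYPE.get(page_type, DEFAULT_PRIORITY):.1f}"


def is_flat(priorities):
    """True when every page carries the same value, i.e. no signal at all."""
    return len(set(priorities)) <= 1
```

The is_flat check is a quick way to detect the "everything is 0.5" problem in an existing file.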

Step 4: Use Sitemap Index Files for Large Sites (Week 3).

If your site has more than 500 pages, use a sitemap index file that links to multiple sub-sitemaps organized by content type:

- /sitemap-index.xml (main index file)

- /sitemap-blog.xml (updated daily)

- /sitemap-products.xml (updated weekly)

- /sitemap-categories.xml (updated monthly)

- /sitemap-static.xml (updated quarterly)

This tells AI which sections change frequently and should be crawled aggressively versus static content that can be crawled occasionally. Crawl budget efficiency improves dramatically.
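An index file is a short XML document that points at each sub-sitemap and says when that section last changed. A minimal sketch using the file names above, with a placeholder domain and placeholder dates:

```python
import xml.etree.ElementTree as ET

SITEMAP_XMLNS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap_index(base_url, sections):
    """Build a sitemapindex from {path: lastmod} pairs.

    lastmod is an ISO date string for the most recent change in that section.
    """
    index = ET.Element("sitemapindex", xmlns=SITEMAP_XMLNS)
    for path, lastmod in sections.items():
        node = ET.SubElement(index, "sitemap")
        ET.SubElement(node, "loc").text = base_url.rstrip("/") + path
        ET.SubElement(node, "lastmod").text = lastmod
    return ET.tostring(index, encoding="unicode")


index_xml = build_sitemap_index(
    "https://example.com/",  # placeholder domain
    {
        "/sitemap-blog.xml": "2024-06-03",       # placeholder dates
        "/sitemap-products.xml": "2024-05-28",
        "/sitemap-categories.xml": "2024-05-01",
        "/sitemap-static.xml": "2024-03-15",
    },
)
```

Submit the index file to Search Console once. The sub-sitemaps are discovered through it.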

Step 5: Monitor and Maintain (Ongoing).

Fixing your sitemap once is not enough. You need ongoing monitoring:

- Weekly automated checks for new 404s in sitemap

- Monthly sitemap validation and resubmission to Search Console

- Quarterly audit of crawl stats to verify indexing speed

- Track submitted-to-indexed ratio (target: 80%+ within 30 days)

This is infrastructure maintenance. It is not glamorous. But it determines whether search engines can discover your content before competitors capture the traffic.
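The submitted-to-indexed ratio is simple enough to track in a spreadsheet, but a scripted check makes it harder to forget. A small sketch, assuming you copy the two counts out of Search Console by hand; the 80% threshold is the target from the list above.

```python
def indexed_ratio(submitted, indexed):
    """Share of submitted URLs that are indexed, as a fraction of 1."""
    if submitted <= 0:
        raise ValueError("submitted must be a positive count")
    return indexed / submitted


def meets_target(submitted, indexed, target=0.80):
    """True when the ratio reaches the 80% target (or a custom one)."""
    return indexed_ratio(submitted, indexed) >= target
```

For example, 700 indexed out of 1,000 submitted fails the check, while 873 out of 900 passes.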

The Proof: What Proper Sitemap Strategy Actually Delivers.

Before I go further, let me address the skepticism: “Does fixing a sitemap really move the needle that fast?”

Yes. With the correct approach, appropriate knowledge, and the right tools, an accurate sitemap can get the majority of your URLs crawled and indexed in hours or days, not months.

I recently led a project to build a brand-new website with over 900 pages using a new domain name. Domain Authority: 0. Never used before. Despite what books and conventional wisdom claim about new domains requiring months to gain traction, we got:

- 100% of pages crawled within 5 days

- 97% of pages indexed on Google and Bing within 30 days

- Clear diagnostic data on why the remaining 3% were not indexing (technical issues we could fix)

The difference? Proper sitemap strategy from day one. Dynamic generation, accurate timestamps, strategic priorities, and proper segmentation. The infrastructure told the truth, and search engines responded accordingly.

Compare that to companies with broken sitemaps on established domains. High domain authority. Years of backlinks. Thousands of pages are stuck in “submitted but not indexed” purgatory for months because their sitemap infrastructure is poisoning crawler trust.

Authority does not save you from sitemap failures. Proper infrastructure beats authority when it comes to indexing speed.

The Bigger Picture: Why This Matters More Now Than Ever

Your sitemap is not just infrastructure maintenance. It is the first contract you sign with every system that decides whether customers ever find you.

AI-powered search does not work like traditional SEO. Users do not browse ten blue links and click through to evaluate sources. AI systems evaluate sources, synthesize information, and present a single answer. But those AI systems can only work with content that search engines have already indexed. If your content was not indexed when AI systems scraped data to formulate answers, you do not exist in the conversation.

Voice assistants recommending products cannot recommend what search engines have not indexed. Agentic AI shopping on behalf of users cannot consider your offering if crawlers never prioritize your product pages due to sitemap failures. ChatGPT cannot cite your expertise if your blog posts took 90 days to be indexed, while competitors were indexed in 48 hours and became authoritative sources that AI systems learned from.

The future of discovery is AI-mediated. Every recommendation, every answer, every voice search result depends on search engines finding and indexing your content efficiently first. Your sitemap determines whether that foundation gets built.

And right now, your sitemap is probably telling search engines that your content does not matter, cannot be trusted, and should be deprioritized in favor of competitors who got this infrastructure right.

This is not theoretical. It is measurable in crawl budget allocation, indexing speed, and recommendation frequency. Companies with accurate sitemaps get 3-5x more pages indexed per month than companies with broken sitemaps, despite identical content quality.

That gap compounds. Within 12 months, the visibility difference becomes insurmountable. Within 24 months, your competitors own topics where you should have had authority because they showed up first in the data sources AI systems train on and scrape from.

Fix your sitemap or accept that you are paying a compounding cost every single day while wondering why competitors with inferior content consistently outrank you.

What This Means: A Quick Guide.

- Sitemap (XML Sitemap): A machine-readable file that lists URLs on your website, along with metadata like LastModified dates and priority values. Search engines use sitemaps to discover and prioritize content for crawling.

- Crawl Budget: The number of pages a search engine crawler will access on your site within a given timeframe. Limited by site authority, server capacity, and crawler trust. Poor sitemap hygiene depletes crawl budget on low-value pages.

- LastModified Date: A timestamp in your sitemap (the lastmod element in the XML) indicating when a page was last updated. Search engines use this to prioritize crawling recently changed content. False timestamps destroy crawler trust.

- Priority Value: A number between 0.0 and 1.0 in your sitemap indicating the relative importance of pages on your site. Not a ranking factor. Treat it as a hint at most: Google’s documentation states that it ignores this value, while other crawlers may weigh it alongside other signals.

- 301 Redirect: A permanent redirect from one URL to another. Should be used when pages move permanently. Sitemaps should list the final destination URL, not the redirect source.

- 404 Error: An HTTP status code indicating a page does not exist. Pages returning 404s should never appear in sitemaps, as they waste crawl budget and erode trust.

- Sitemap Index File: A master sitemap that links to multiple sub-sitemaps, allowing strategic organization by content type, update frequency, or site section. Recommended for sites with 500+ pages.

- Dynamic Sitemap: A sitemap that updates automatically when content changes, as opposed to static sitemaps that require manual regeneration. Essential for maintaining accurate LastModified dates and complete URL coverage.

- Crawl → Index → Rank Funnel: The hierarchy that determines visibility. Search engines must first crawl (discover) pages, then index (add to database) them, before pages can rank (appear in search results). Sitemaps influence the crawl stage, which determines everything downstream.

- Canonicalization: The process of selecting the preferred URL when multiple URLs contain similar or identical content. Proper canonical tags work with sitemaps to guide crawlers to the right version.

The 5-Minute Sitemap Audit You Can Run Today:

Your sitemap has been lying for months, maybe years. Search engines have been quietly downgrading your crawl priority while you focused on content quality, backlinks, and ranking tactics that cannot work when the foundation is broken.

The questions below are not rhetorical. They are diagnostic. If you cannot answer them with specificity, you are operating blind while competitors who can answer them are systematically capturing the visibility you assume you deserve.

Q. When was the last time anyone on your team actually looked at your sitemap file?

Not “checked that it exists in Search Console.” Not “confirmed it was submitted years ago.” Actually opened the XML file, reviewed the URLs, validated the timestamps, and verified the priority values. If the answer is “I don’t know” or “never,” you have infrastructure rot you cannot see.

Q. How many URLs in your current sitemap return 404 errors?

Pull your sitemap. Run every URL through a crawler. Count the 404s. The average across audits I conduct is 18%. Some companies discover 40% of their sitemap points to pages that no longer exist. Search engines hit those 404s daily, wasting crawl budget while learning not to trust you.

Q. Does your sitemap update automatically when you publish new content, or is it manually triggered?

If it is manual, your sitemap is already out of date. If you do not know who handles this or how often it happens, it is manual and broken. Your last 30 blog posts are probably not in your sitemap, which means they rely entirely on internal links for discovery, taking weeks or months to index while competitors’ content is indexed in days.

Q. What is your submitted-to-indexed ratio in Google Search Console?

Go to the Page indexing report (formerly called Coverage). Look at “Submitted but not indexed.” If that number is above 20% of your total submitted URLs, your sitemap is actively harming your indexing efficiency. Search engines are deprioritizing pages you told them were important because historical patterns show you lie about what matters.

Q. Can your development team explain what the priority values in your sitemap mean and why they are set the way they are?

If they cannot, those values are defaults that provide zero strategic guidance. If every page has the same priority, you are telling search engines you have no editorial judgment, and they will allocate crawl budget randomly rather than strategically.

Q. When your marketing team publishes new content, does anyone verify that it appears in your sitemap within 24 hours?

If not, you have a coordination failure between content creation and technical infrastructure. You are spending resources creating content that sits invisible to search engines for weeks because nobody connected publishing workflows to sitemap generation.

Q. How many pages on your site have never been indexed, even though they’ve been in your sitemap for months?

Check Search Console for “Discovered – currently not indexed.” These are pages Google knows about but has not yet crawled. Often, this happens because sitemap signals (such as priority and freshness) have contradicted what crawlers found in the past, leading them to conclude your pages are not worth the crawl budget.

If these questions reveal problems you do not have the resources, expertise, or bandwidth to diagnose and fix, that is exactly what we do. Technical SEO infrastructure is not glamorous, but it is foundational. Everything you are trying to accomplish with content, rankings, and AI visibility depends on this layer functioning correctly.

Reach out if you want help fixing what is broken before it costs you another quarter of invisible losses.

Now It’s Your Turn.

You just discovered your sitemap is broken. Most of your competitors’ sitemaps are broken too. But a few are not. And those few are eating your lunch while you optimize content that search engines will not index for months.

The cost is not theoretical. It is compounding daily. Every page that should have been indexed in 48 hours but takes 60 days is 58 days of lost traffic, lost authority building, and lost AI training data. Multiply that across hundreds or thousands of pages, and you can calculate exactly how much your sitemap failures are costing.

But here are the questions that matter more:

- How many quarters of declining organic traffic will you accept before connecting it to the infrastructure nobody monitors?

- What happens to your business when competitors discover you are losing rankings, not because their content is better, but because their sitemaps tell the truth and yours does not?

- How much revenue are you willing to sacrifice to avoid spending one day fixing a problem that has been compounding for years?

- When AI systems scrape content to answer user questions, and your pages are not indexed yet because of sitemap failures, how long before customers assume you do not exist in your own category?

- If your executive team knew that a single infrastructure document was systematically undermining every SEO investment you have made, would they still consider it a “technical detail” not worth their attention?

The choice is simple. Fix the foundation or keep building on sand. Your competitors with inferior products and superior infrastructure are not waiting for you to figure this out.

Next week, we examine the second dimension of the invisible “AI tax”: why your best content is buried so deep in your site architecture that even a perfect sitemap cannot save it. If you think sitemap failures are costing you traffic, wait until you see what happens when search engines can crawl your content but cannot understand which pages actually matter.

See you next week.